How do Databases do Storage and Retrieval?

Chapter 3 of Designing Data Intensive Applications: Storage and Retrieval

Welcome to the 10% Smarter newsletter. I’m Brian, a software engineer writing about tech and growing skills as an engineer.

If you’re reading this but haven’t subscribed, consider joining!

In past posts, we’ve covered the data modeling side of databases. This post will give an overview of the internal storage and retrieval in databases. Let’s start by compare two different storage engines:

Log-structured storage engines (log structured merge trees)

Page-oriented storage engines (b-trees)

Log-Structured Storage Engines

To start, we’ll start with some definitions:

A log is an append-only sequence of records. A record is a key, value pair to store and access data.

Indexes are data structures to speeds up reads and find a record. Well-chosen indexes speed up read queries but every index slows down writes.

In a log-structured storage engine, we index records, using an in-memory key-value store, also known as a hash map.

Additionally, we keep records in an append-only log file. Then wouldn’t we run out of disk space? We solve this by breaking the log into segments files of certain sizes. Compaction takes a segment and keeps only the most recent key-value records.

Then we merge them into a new file and the old segment files will be deleted. This process is known as compaction and merging and can be done at the same time. Each segment has its own in-memory hash map and we can read the most recent key-value records from each segment file. This process can be done in a background thread.

This is the basic Log-Structured Storage Engine design. Its properties and considerations are as followed:

Efficiency: both appending and segment merging are sequential writes, which are more efficient that random writes.

File format: binary format.

Deleting Records: we use a deletion record (tombstone) that tells the merging process to discard previous values.

Crash Recovery: if restarted, the in-memory hash maps are lost. You can recover from reading each segment or speed up recovery by storing a snapshot of each segment hash map on disk.

Concurrency Control: this uses a single write thread as writes are appended to the log in a strictly sequential order, but reads can be concurrent by multiple threads as segments are immutable.

Limitations: The hash map must fit in memory and range queries are not efficient.

So far we’ve looked at basic version of Log-Structured Storage Engine, but a modified design known as SSTables and LSM-Trees are used more in practice.

SSTables and LSM-Trees

A Sorted String Table or SSTable is another design that require the sequence of key-value records to be sorted by keys and for each key to appear only once in the merged segment file (compaction already ensures this).

The advantages of SSTables over log segments with hash indexes are:

Merging segments is simple and efficient with mergesort.

You can store fewer mapping keys in memory. The rest are easy to find with a search between indexed keys.

Since reads will scan over several key-value records in a range, we can group these records into a block and compress it before writing to disk.

We sort the data with a red-black tree or AVL tree. This in-memory balanced tree structure is called a memtable. When the memtable get bigger than some threshold (megabytes), we’ll write it to disk as a SSTable. On a read request, try to find the key in the memtable or an on-disk memtables, and then find the record in an on-disk segment file. From time to time, run merging and compaction with a background thread. In a database crash, the most recent writes are lost so write them to a separate on-disk log so we can recover the memtable. Once the memtable is written to an on-disk SSTable, the log is discarded.

Storage engines based on this are called LSM-Tree structure engines (Log Structure Merge-Tree).

LSM-tree algorithm can be slow when looking up keys that don't exist in the database. Bloom filters are used to speed up checking for existence in the database.

Databases that use SSTables and LSM-Trees include:

LevelDB (Google, storage engine for BigTable)

RocksDB (Facebook, open sourced)

Cassandra

Now let’s look at page-oriented storage engines.

Page-Oriented Storage Engines

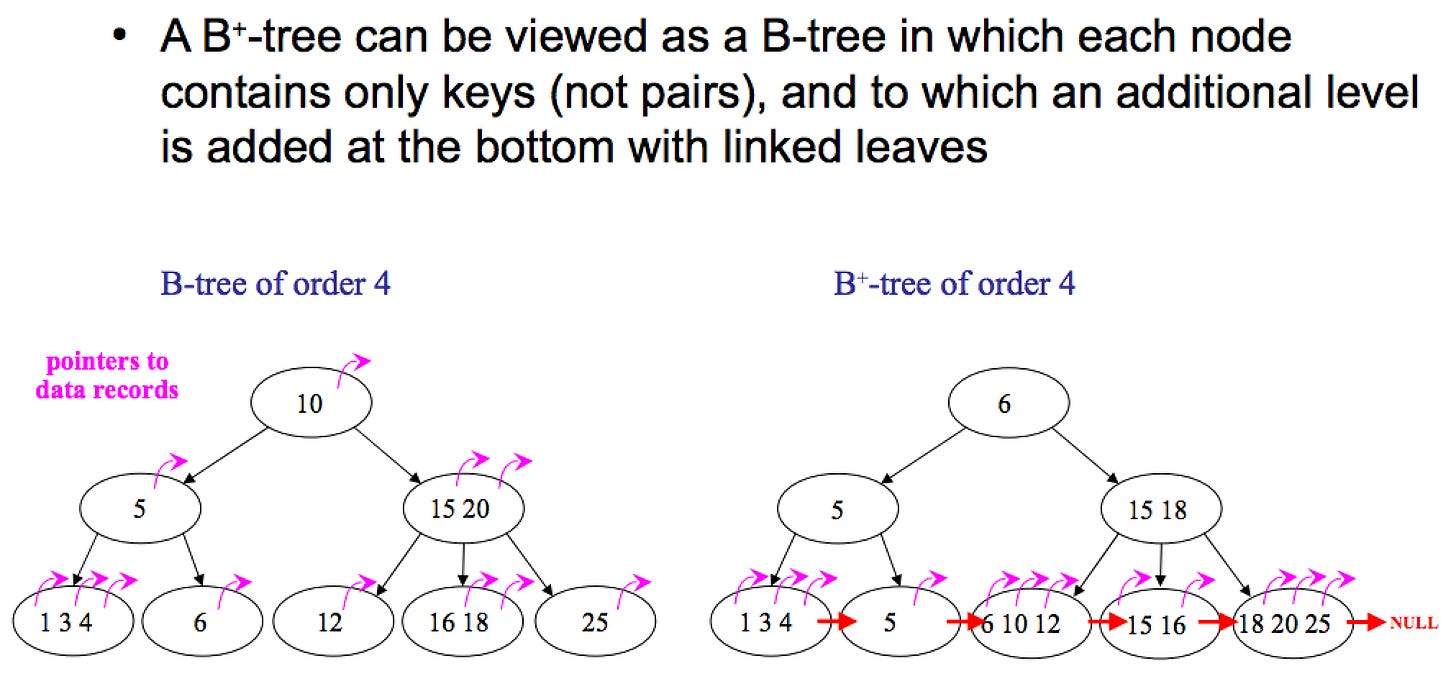

Page-oriented storage engines are built on B-trees, which are the indexing structure used in relational databases. B-trees keep the key-value records sorted by keys, allowing for efficient key-value lookups and range queries. This breaks the database into fixed sized blocks or pages on disk, traditionally 4KB.

When searching a B-tree, you start at the root page, which contains multiple keys and references to child pages, and traverse down the tree until you find your requested record. The branching factor is the number of references to child pages.

Both updates and writes search through the tree for a leaf page containing the key location. If there isn't enough free space in the page, it is split into two half-full tree to keep the tree balanced. A B-tree with n keys will always have a depth of O(log n).

Write operations overwrite pages on disk, compared to LSM-trees which only append to files.

If the database crashes with some pages written, you’ll end up with a corrupted index. We solve this with a write-ahead log (WAL, also know as the redo log).

We can also have concurrent access with multiple threads doing concurrency control with latches (lightweight locks) on nodes in the tree internal data structures.

An additional common extension are B+ trees, which only store data at leaf nodes and pointers to other leafs for easy range queries.

Comparing B-trees and LSM-trees

LSM-trees are typically faster for writes and B-trees are faster for reads. LSM-trees are slower for reads because they have to check different data structures, and SSTables have to do compaction.

The Advantages of LSM-trees are:

Higher write throughput due to lower write amplification. SSDs can only overwrite blocks a limited number of times before wearing out. LSM-tree writes are mostly sequential appends.

LSM-trees compress better B-trees tend to leave disk space unused due to fragmentation.

The Disadvantages of LSM-trees are:

The background compaction process can interfere with the performance of reads and writes.

On B-trees, each key exists in exactly one place in the index. This offers strong transaction isolation using latches on whole ranges of keys.

Other Indexing Structures

So for we’ve looked at indexes for log-structured and page-oriented storage engines. A primary index uniquely identify one row in a relational table, or one document in a document database. This is done from the key mapping to the key-value record (row/document).

You can also use a secondary index, which is similar but not necessarily unique. These indexes can either map to a list of matching rows, or adding an additional unique id entity to the row.

Multi-Column Indexes

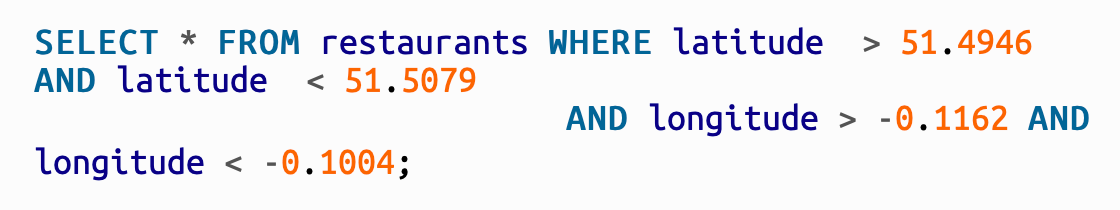

Multi-Column Indexes allow you to index using multiple columns as a key. There are two ways to do it. Concatenated indexes indexing as a tuple like (lastname, firstname). Multi-dimensional indexes are more general and useful for geospatial data, which B-trees and LSM-trees cannot do efficiently. This is done using an R-tree.

Could You Keep Everything in Memory?

So far we’ve looked at storage using disks and indexing using memory. While Disks have two significant advantages: they are durable, and they have lower cost per gigabyte than RAM, RAM is quickly becoming affordable.

You can indeed keep everything in memory and examples of in-memory systems are Spark, MemSQL, and in-memory caches such as Redis and Memcached.

Transaction Processing, Analytics, and Data Warehouses

Why do we need log-structured storage engines and page-oriented storage engines? Why couldn’t we use only one of them? Database operations have evolved to fall under two processes: transaction processing or analytics processing.

OLTP: Online Transaction Processing. Transactions are a group of reads and writes that form a logical unit and typically return a small number of records. These are necessary for bank transactions.

OLAP: Online Analytics Processing. These queries are performed by business analysis that perform aggregation over a huge number of record.

Initially, OLTP and OLAP were both done on the same database. However analytics workloads, such as aggregation, on a database are expensive so data warehouses are a separate specialized database for analytics usage. Data is extracted from OLTP databases and loaded into the warehouse using an Extract-Transform-Load (ETL) process.

Some examples are Amazon Redshift, Snowflake, Spark SQL, or Facebook Presto.

Column Oriented Storage

In most OLTP databases, storage is laid out in a row-oriented fashion. Analytics queries often query across few columns but all rows.

Column Oriented Storage databases for OLAP operations store all the values from each column together instead. If each column is stored in a separate file, a query only needs to read and parse the columns that it is interested in.

Column Oriented Storage databases use LSM-trees. All writes go to an in-memory store first, where they are added to a sorted structure and prepared for writing to disk.

Cassandra and BigTable are column families databases. Within each column family, they store all the columns from a row together, along with a row key.

Conclusion

This summarizes the internal storage and retrieval in databases as we compare log-structured and page-oriented storage engines in their use cases of OLTP and OLAP operations.

Next week, we’ll look at the encoding and dataflow of data as it goes through databases, services, and message queues. Stay tune for more and share if you like this article!